Natural Language Processing

Internship 2025

Career-Based Complete Artificial Intelligence Internship Program. Get insights into Python, Machine Learning, and Deep Learning concepts, with Project Implementation and Assignments.

1 or 2 Months

Online

8+ Live Projects

Dual Certification

Ultimate Step towards your Career Goals: Expert in NLP Technologies

Get ahead with the FutureTech Industrial Internship Program: gain hands-on experience, connect with industry leaders, and develop cutting-edge skills. Earn a stipend, receive expert mentorship, and obtain a certificate to boost your career prospects. Transform your future with practical, real-world learning today.

Internship Benefits

Mentorship

Receive guidance and insights from industry experts.

Hands-on Experience

Gain practical skills on real-world, cutting-edge projects.

Networking

Connect with professionals and peers in your field.

Skill Development

Enhance your technical and soft skills.

Career Advancement

Boost your resume with valuable experience.

Certificate

Get a certification to showcase your achievements.

NLP Internship Overview

Key Notes:

Comprehensive Learning: Covers both foundational and advanced concepts in NLP and machine learning.

Hands-On Projects: Practical projects to apply learned techniques.

Advanced Techniques: Detailed exploration of RNN, LSTM, and transformers.

Tools and Frameworks: Mastering tools like TensorFlow, PyTorch, and Hugging Face.

This course provides a deep dive into NLP, machine learning, and deep learning, equipping you with the skills to innovate and excel in these dynamic fields. Ready to elevate your expertise? Let’s get started!

Introduction to NLP and Machine Learning

Overview: Understand the basics and significance of Natural Language Processing (NLP) and machine learning.

Regex: Learn regular expressions for text pattern matching.

Pandas for NLP: Utilize Pandas for handling and analyzing text data.

Text Preprocessing Techniques

Tokenization, Stemming, Lemmatization, Stop Words: Fundamental steps to prepare text for analysis.

Bag of Words, TF-IDF, N-grams: Methods to convert text into numerical formats for machine learning.
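To give a feel for these methods, here is a minimal sketch in plain Python. The toy corpus and the helper names (`tokenize`, `ngrams`, `tf_idf`) are assumptions made for illustration, and the TF-IDF formula is the basic unsmoothed variant; libraries such as scikit-learn use smoothed versions, so their numbers will differ.

```python
import math
import re
from collections import Counter

# Toy corpus; these sentences are made up for illustration.
docs = [
    "the battery life is great",
    "the battery died fast",
    "great screen and great sound",
]

def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())

def ngrams(tokens, n):
    """Return the n-grams (as tuples) found in a token sequence."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokenized = [tokenize(d) for d in docs]

# Bag of words: one count vector per document over a shared, sorted vocabulary.
vocab = sorted({tok for toks in tokenized for tok in toks})
bow = [[Counter(toks)[term] for term in vocab] for toks in tokenized]

def tf_idf(term, toks, all_docs):
    """Term frequency times unsmoothed inverse document frequency.

    Assumes the term occurs in at least one document of all_docs.
    """
    tf = toks.count(term) / len(toks)
    df = sum(1 for d in all_docs if term in d)
    return tf * math.log(len(all_docs) / df)

# Bigrams of the first document, e.g. ("the", "battery").
bigrams = ngrams(tokenized[0], 2)
```

In this corpus, "screen" appears in only one document while "great" appears in two, so `tf_idf("screen", tokenized[2], tokenized)` comes out higher than `tf_idf("great", tokenized[2], tokenized)` even though "great" occurs twice in that document.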

Practical Applications

Sentiment Classification on Amazon Reviews: Analyzing customer sentiment using machine learning.

Deep Learning Basics

Introduction to Deep Learning: Basic concepts of deep learning.

TensorFlow Basics: Setting up and using TensorFlow for deep learning tasks.

Advanced Preprocessing

Word2Vec, Average Word2Vec: Techniques for word representation.

Implementation: Practical application of advanced preprocessing methods.

Recurrent Neural Networks (RNN) and Long Short-Term Memory (LSTM)

RNN and LSTM Architecture Walkthrough: Understanding the structure and functionality of RNNs and LSTMs.

Next Word Prediction Using LSTM: Building predictive text models.

Generating Poetic Text Using LSTM: Creative applications of LSTM for generating poetry.

Fake News Classifier Using Bidirectional LSTM: Detecting misinformation using Bidirectional LSTMs.

Sequence to Sequence (Seq2Seq) Models

Text Summarization Using LSTM Encoder-Decoder Architecture: Implementing Seq2Seq models for summarizing text.

Large Language Models (LLM)

Introduction to LLM and Transformer Architecture: Basics of large language models and transformers.

Hugging Face: Fine-tuning pretrained models on custom datasets.

LangChain Applications:

Chat with Books and PDF Files Using Llama 2 and Pinecone: Advanced NLP applications for document interactions.

Blog Generation LLM App Using Llama 2: Creating a blog generation application.

SQL Query LLM Using Google Gemini: Using LLM for SQL queries.

YouTube Video Transcribe Summarizer LLM App with Google Gemini: Summarizing YouTube videos.

Looking for in-depth Syllabus Information? Explore your endless possibilities in NLP with our Brochure!

Share this detailed brochure with your friends! Spread the word and help them discover the amazing opportunities awaiting them.

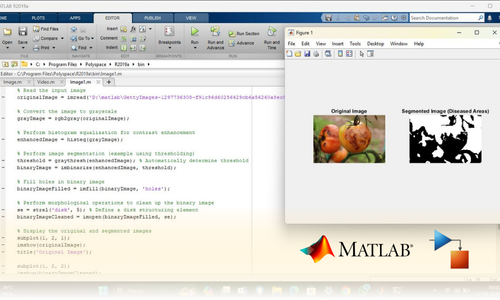

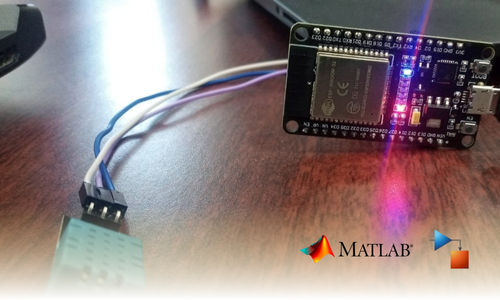

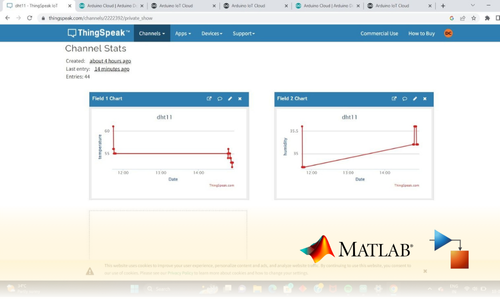

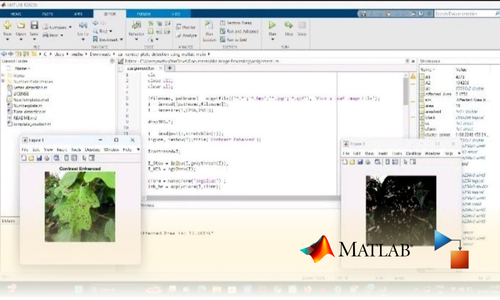

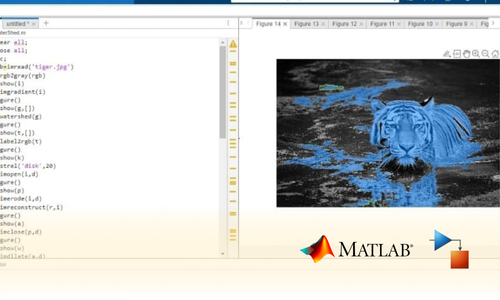

Project Submission: Example Output Screenshots from Our Clients

Take a look at these sample outputs crafted by our clients. These screenshots showcase the impressive results achieved through our courses and projects. Be inspired by their work and visualize what you can create!

Dual Certification: Internship Completion & Participation

Earn prestigious Dual Certification upon successful completion of our internship program. This recognition validates both your participation and the skills you have honed during the internship.

How does this Internship Program Work?

Step 1: Enroll in the Program

- Get a Mentor Assigned

- Presentations & Practice Codes

- Learn on Your Own Schedule

- Understand the Concepts

Step 2: Project Development

- Implement the Skills Learned

- Develop Projects with assistance

- Get Codes for Reference

- Visualise the Concepts

Step 3: Get Certified

- Certificate of Internship

- Project Completion Certificate

- Share on social media

- Get Job Notifications

Choose the Plan That Fits Your Needs

Master the Latest Industrial Skills. Select a technology domain & kick off your Internship immediately.

1 Month

₹1999/-

₹999/-

- Internship Acceptance Letter

- 90 Days from the date of payment

- 4 LIVE interactive Mastermind Sessions

- 4+ Capstone Projects & Codes

- Full Roadmap

- Internship Report

- 1 Month Internship Certificate

2 Months

₹3299/-

₹1899/-

- Internship Acceptance Letter

- 180 Days from the date of payment

- 4 LIVE interactive Mastermind Sessions

- 12+ Capstone Projects & Codes

- Full Roadmap

- Internship Report

- Participation Certificate

- 2 Month Internship Certificate

Our Alumni Employers

Curious where our graduates make their mark? Our students go on to excel in leading tech companies, innovative startups, and prestigious research institutions. Their advanced skills and hands-on experience make them highly sought-after professionals in the industry.

EXCELLENT

Trustindex verifies that the original source of the review is Google.

I recently completed my Python internship under the guidance of Mentor poongodi mam We learnt so many new things that developed my knowledge.this experience is good to learn

I completed my python internship guidance of mentor poongodi mam. She thought us in friendly qay

Poongodi mam done very well She took the class very well When we ask any doubt without getting bored she will explain,we learned so much from mam,marvelous

I have handled by poongodi mam.domain python intership...was good

I recently completed Python internship under the guidance of poongodi mam who excelled in explaining concepts in an easily understandable way

Fantastic class we were attended..we got nice experience from this class..thank you for teaching python mam...

-The course content was well-structured - I gained valuable insights into microcontrollers, sensors, and programming languages- The workshop was informative, interactive, and challenging, pushing me to think creatively. Ms Jimna our instructor her guidance and feedback helped me overcome obstacles and improve my skills.

The learning experience was really worth since more than gaining just the knowledge all of the inputs were given in a friendly and sportive manner which then made it a good place to learn something with a free mindset... 👍🏻

I recently completed my full stack python intership under the guidance of mentor Gowtham,who excelled in explaining concepts in an easily understand manner

Gowtham-very interesting class and I learning so many things in full stack python development and I complete my internship in Pantech e learning and it is useful for my career

FAQ

What are transformers in NLP?

Transformers are a type of deep learning model that has revolutionized NLP. They use self-attention mechanisms to process all the tokens of an input in parallel rather than one at a time, which makes them faster to train and better at capturing long-range context than sequential architectures like RNNs and LSTMs. Transformers are the foundation for state-of-the-art models like:

- BERT (Bidirectional Encoder Representations from Transformers): Focuses on understanding each word using the context on both its left and its right at the same time.

- GPT (Generative Pre-trained Transformer): A model designed for text generation tasks, including chatbots and creative writing.

- T5 (Text-to-Text Transfer Transformer): Converts all NLP tasks into a text-to-text format, making it highly versatile.
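To make the self-attention idea concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is a simplification under stated assumptions: the token vectors are made up, and queries, keys, and values are all set to the input itself, whereas real transformers first apply learned projection matrices and use several attention heads.

```python
import math

def softmax(scores):
    """Turn a list of scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with queries = keys = values = x.

    Learned query/key/value projections are omitted here for brevity.
    """
    d = len(x[0])
    output, weights = [], []
    for query in x:
        # Similarity of this token to every token, scaled by sqrt(d).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in x
        ]
        w = softmax(scores)
        weights.append(w)
        # Each output vector is a weighted average of all token vectors.
        output.append([sum(w_j * v[i] for w_j, v in zip(w, x)) for i in range(d)])
    return output, weights

# Three made-up 2-dimensional token vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
output, weights = self_attention(tokens)
```

Each row of `weights` shows how much one token attends to every other token. No row depends on the result of another, which is why the computation can run in parallel across positions.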

What are word embeddings?

Word embeddings are a way to represent words in a dense, continuous vector space. Unlike one-hot encoding, which is sparse, word embeddings capture semantic relationships between words. Words with similar meanings tend to be closer in vector space. Popular word embedding models include:

- Word2Vec

- GloVe

- FastText
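The contrast with one-hot encoding can be sketched with cosine similarity. The dense vectors below are hand-picked toy values, not the output of a trained Word2Vec, GloVe, or FastText model; they only illustrate how related words can end up close together.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# One-hot vectors: every word gets its own axis, so all pairs are orthogonal.
one_hot = {"cat": [1, 0, 0], "dog": [0, 1, 0], "car": [0, 0, 1]}

# Hypothetical dense embeddings (hand-picked for illustration only).
dense = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

one_hot_similarity = cosine(one_hot["cat"], one_hot["dog"])  # 0.0
cat_dog = cosine(dense["cat"], dense["dog"])
cat_car = cosine(dense["cat"], dense["car"])
```

With one-hot vectors, "cat" is exactly as unrelated to "dog" as it is to "car". With the toy dense vectors, `cat_dog` is much larger than `cat_car`, which is the kind of semantic closeness that embeddings are meant to capture.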

What is the difference between rule-based and machine learning-based NLP?

Rule-based NLP: Involves creating a set of explicit rules for language processing. It’s often based on linguistic patterns and syntax rules. This approach is rigid and typically struggles with the complexity of natural language.

Machine Learning-based NLP: Uses algorithms to learn from data and make predictions. It can adapt to new contexts, handle ambiguity better, and is often used for tasks like classification, translation, and text generation. Deep learning-based models (e.g., transformers) are particularly popular for this.
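The difference can be sketched with a toy sentiment task. Everything below is an assumption made for illustration: the keyword lists, the four training sentences, and the function names. The learned model is a very small Naive Bayes classifier with add-one smoothing, chosen because it fits in a few lines, not because it is what production systems use.

```python
import math
from collections import Counter

# Rule-based: hand-written keyword lists (hypothetical).
POSITIVE = {"good", "great", "excellent"}
NEGATIVE = {"bad", "poor", "terrible"}

def rule_based(text):
    """Classify by counting keyword hits; unseen wording yields 'unknown'."""
    words = text.lower().split()
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return "pos" if score > 0 else "neg" if score < 0 else "unknown"

def train_naive_bayes(examples):
    """Count words per label from (text, label) pairs."""
    word_counts, label_counts = {}, Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.lower().split())
    return word_counts, label_counts

def predict(model, text):
    """Pick the label with the highest smoothed log-probability."""
    word_counts, label_counts = model
    vocab = {w for counts in word_counts.values() for w in counts}
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, counts in word_counts.items():
        score = math.log(label_counts[label] / total_docs)
        total = sum(counts.values())
        for w in text.lower().split():
            # Add-one smoothing keeps unseen words from zeroing the score.
            score += math.log((counts[w] + 1) / (total + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Tiny made-up training set.
train = [
    ("great phone love it", "pos"),
    ("love the camera", "pos"),
    ("terrible battery hate it", "neg"),
    ("hate the screen", "neg"),
]
model = train_naive_bayes(train)
```

For the sentence "love this camera", `rule_based` returns "unknown" because none of its keywords appear, while `predict(model, "love this camera")` returns "pos" because the model learned from the examples that "love" and "camera" go with positive reviews.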

What are some common NLP libraries?

- spaCy: A fast and efficient library for processing text, supporting a wide range of NLP tasks.

- NLTK (Natural Language Toolkit): A popular Python library for text processing and analysis.

- Transformers (by Hugging Face): A library that provides access to pre-trained models for various NLP tasks, including BERT, GPT, and others.

- TextBlob: A simpler NLP library for basic tasks like part-of-speech tagging, noun phrase extraction, and sentiment analysis.

- Gensim: Specialized for topic modeling and document similarity tasks.

What are some popular NLP techniques?

- Tokenization: Splitting text into smaller units such as words or sentences.

- Stemming and Lemmatization: Reducing words to their root forms (e.g., “running” → “run”).

- Word Embeddings: Representing words in a continuous vector space to capture semantic relationships (e.g., Word2Vec, GloVe).

- Transformers: A deep learning architecture that has revolutionized NLP tasks, especially through models like BERT, GPT, and T5.

- Attention Mechanisms: A technique that helps models focus on the most relevant parts of the input text.

- Pre-trained Models: Using models like BERT, GPT, and T5 that are pre-trained on large corpora and fine-tuned for specific tasks.
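The first two techniques in this list can be sketched with nothing but Python's `re` module. The function names are hypothetical, and `crude_stem` is a deliberately simple suffix stripper written for this example; real projects would normally use a library stemmer or lemmatizer such as those in NLTK or spaCy.

```python
import re

def split_sentences(text):
    """Split text into sentences after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def split_words(text):
    """Lowercase the text and extract word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def crude_stem(word):
    """Strip a few common English suffixes (much cruder than a real stemmer)."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            stem = word[:-len(suffix)]
            # Undo consonant doubling, e.g. "runn" -> "run".
            if len(stem) >= 2 and stem[-1] == stem[-2] and stem[-1] not in "aeiouls":
                stem = stem[:-1]
            return stem
    return word

text = "Transformers changed NLP. Are models still improving?"
sentence_tokens = split_sentences(text)
word_tokens = split_words("Running models jumped")
stems = [crude_stem(w) for w in word_tokens]  # ["run", "model", "jump"]
```

A rule set this small breaks quickly on irregular words (for example, "went" or "better"), which is one reason lemmatizers rely on vocabulary and part-of-speech information instead of suffix rules alone.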

What are the key challenges in NLP?

- Ambiguity: Words can have multiple meanings depending on context (e.g., “bank” can mean a financial institution or the side of a river).

- Contextual Understanding: Understanding the context and nuance of phrases is difficult for machines.

- Slang, Dialects, and Idioms: Informal language, regional dialects, and idiomatic expressions can be hard for models to interpret.

- Multilinguality: Handling multiple languages and translating between them accurately.

- Data Quality: NLP models require vast amounts of high-quality, annotated data, which is time-consuming and expensive to collect.

- Bias and Fairness: NLP models can inherit biases from the data they are trained on, leading to unfair or discriminatory outcomes.

Start Your Tech Journey Today

Sign Up for Exclusive Resources and Courses Tailored to Your Goals!

© 2025 pantechelearning.com