Autonomous Vehicle Design

Internship 2025

A Career-Focused, Complete Autonomous Vehicle Design Internship Program. Get insights into MATLAB, Simulink, BMS (Battery Management Systems), Simscape, vehicle dynamics, and force calculation concepts, with project implementation and assignments. Stay up to date with the latest industry developments.

1 or 2 Months

Online

8+ Live Projects

Dual Certification

The Ultimate Step Toward Your Career Goals: Become an Expert in Autonomous Vehicle Design

Get ahead with the FutureTech Industrial Internship Program: gain hands-on experience, connect with industry leaders, and develop cutting-edge skills. Earn a stipend, receive expert mentorship, and obtain a certificate to boost your career prospects. Transform your future with practical, real-world learning today.

Internship Benefits

Mentorship

Receive guidance and insights from industry experts.

Hands-on Experience

Gain practical skills through real-world, cutting-edge projects.

Networking

Connect with professionals and peers in your field.

Skill Development

Enhance your technical and soft skills.

Career Advancement

Boost your resume with valuable experience.

Certificate

Get a certification to showcase your achievements.

Autonomous Vehicle Design Internship Overview

Autonomous vehicles rely on multiple sensors to perceive their surroundings and make informed decisions. The main types of sensors are:

Camera

- Function: Cameras provide visual data, capturing images and video feeds of the surrounding environment. This sensor is crucial for detecting lane markings, traffic signals, signs, and pedestrians.

- Use Cases: Object detection, traffic sign recognition, lane-keeping, and pedestrian detection.

Radar (Radio Detection and Ranging)

- Function: Radar uses radio waves to detect objects and measure their distance, speed, and direction. Compared with cameras, radar is less affected by fog, rain, or low light.

- Use Cases: Detecting the speed and distance of vehicles ahead, adaptive cruise control, collision avoidance.
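Radar finds distance from how long an echo takes to return. It finds closing speed from the Doppler shift of that echo. The Python sketch below shows both relationships. The 77 GHz carrier is an assumed value typical of automotive radar, and the function names are illustrative, not taken from any toolbox.

```python
# Speed of light in vacuum, m/s
C = 299_792_458.0


def radar_range(round_trip_time_s: float) -> float:
    """Distance to a target from the echo's round-trip time.

    The signal travels out and back, so the one-way distance is half the path.
    """
    return C * round_trip_time_s / 2.0


def radial_velocity(doppler_shift_hz: float, carrier_hz: float = 77e9) -> float:
    """Closing speed (m/s) from the Doppler shift of the echo.

    A positive shift means the target is approaching. The 77 GHz default is an
    assumed carrier frequency, typical of automotive radar.
    """
    wavelength_m = C / carrier_hz
    # Divide by 2 because the wave is Doppler-shifted on the way out and on the way back.
    return doppler_shift_hz * wavelength_m / 2.0


if __name__ == "__main__":
    print(f"range: {radar_range(0.4e-6):.1f} m")                # echo after 0.4 microseconds
    print(f"closing speed: {radial_velocity(10_000):.2f} m/s")  # 10 kHz Doppler shift
```

Example values: an echo that arrives 0.4 µs after transmission puts the target roughly 60 m away. A 10 kHz shift at 77 GHz corresponds to a closing speed of about 19.5 m/s.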

Lidar (Light Detection and Ranging)

- Function: Lidar uses laser beams to measure the distance between the sensor and objects in the vehicle’s environment. It creates a precise 3D map of the surroundings.

- Use Cases: High-resolution mapping, object detection, and obstacle avoidance.
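Each lidar return is a measured range plus the direction the beam was fired in. Converting every return to Cartesian coordinates is what turns a sweep into a 3D point cloud. The Python sketch below assumes a sensor frame with x forward, y left, and z up. That convention varies between sensors.

```python
import math


def lidar_return_to_xyz(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one lidar return (measured range plus beam angles) to a 3D point.

    Assumed sensor frame: x forward, y left, z up; azimuth is measured from the
    x-axis toward the y-axis, elevation upward from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection of the beam onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


# A few returns from one sweep: (range m, azimuth deg, elevation deg)
returns = [(10.0, 0.0, 0.0), (10.0, 90.0, 0.0), (5.0, 0.0, -30.0)]
point_cloud = [lidar_return_to_xyz(*r) for r in returns]
for p in point_cloud:
    print(tuple(round(c, 3) for c in p))
```

The third return, fired 30° downward, lands about 4.33 m ahead of the sensor and 2.5 m below it. That is how ground points appear in the cloud.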

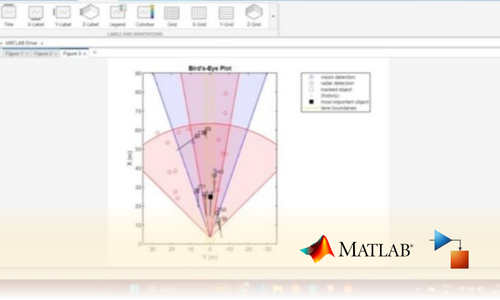

Understanding how each sensor in an autonomous vehicle “sees” the environment is critical for system development and testing. Visualizing sensor coverage, detection, and tracks allows engineers to assess the accuracy and reliability of sensor outputs.

Sensor Coverage: This refers to the area or volume that a sensor can monitor at any given time. For example, cameras may have a wide field of view but limited depth perception, while radar has less visual detail but better distance accuracy.
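Sensor coverage is often pictured in 2D as a wedge: a maximum range plus a horizontal field of view around the direction the sensor points. The Python sketch below checks whether a point lies inside such a wedge. The `SensorCoverage` class and the camera and radar numbers are hypothetical, chosen only to contrast a wide, short-range sensor with a narrow, long-range one.

```python
import math
from dataclasses import dataclass


@dataclass
class SensorCoverage:
    """2D coverage wedge of a sensor (hypothetical parameters for illustration)."""
    x: float            # mounting position, m
    y: float
    yaw_deg: float      # direction the sensor points, degrees
    fov_deg: float      # total horizontal field of view, degrees
    max_range_m: float

    def covers(self, px: float, py: float) -> bool:
        dx, dy = px - self.x, py - self.y
        if math.hypot(dx, dy) > self.max_range_m:
            return False
        bearing = math.degrees(math.atan2(dy, dx))
        # Wrap the angular offset into [-180, 180) so that e.g. 179 and -179 deg compare correctly
        offset = (bearing - self.yaw_deg + 180.0) % 360.0 - 180.0
        return abs(offset) <= self.fov_deg / 2.0


# Assumed example values: a wide, short-range camera vs. a narrow, long-range radar
camera = SensorCoverage(0.0, 0.0, 0.0, fov_deg=120.0, max_range_m=80.0)
radar = SensorCoverage(0.0, 0.0, 0.0, fov_deg=20.0, max_range_m=200.0)
for target in [(50.0, 40.0), (150.0, 5.0)]:
    print(target, "camera:", camera.covers(*target), "radar:", radar.covers(*target))
```

With these values the camera sees the nearby object off to the side and the radar misses it. The reverse holds for the distant object straight ahead. This is one reason systems combine several sensors.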

Detection: Detection involves identifying objects, vehicles, pedestrians, or obstacles within the sensor’s range. Sensors like radar can detect moving objects even in poor weather conditions, while cameras excel at identifying objects based on color, shape, or texture.

Tracking: This is the process of following the detected objects over time. For example, once a vehicle is detected, tracking algorithms estimate its trajectory and predict future positions.
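The Python sketch below shows the predict-then-correct cycle behind many trackers for one object moving along a line. It uses an alpha-beta filter, a fixed-gain simplification of the Kalman filter that assumes constant velocity between updates. The gains and the noise-free detections are illustrative assumptions. Production trackers usually use Kalman-type filters in two or three dimensions.

```python
def alpha_beta_track(measurements, dt, alpha=0.5, beta=0.1):
    """Estimate position and velocity of one object from 1D position detections.

    Alpha-beta filter: a fixed-gain simplification of the Kalman filter that
    assumes constant velocity between updates. The gains are illustrative.
    Returns a list of (position, velocity) estimates, one per measurement.
    """
    pos, vel = measurements[0], 0.0  # initialise the track on the first detection
    estimates = [(pos, vel)]
    for z in measurements[1:]:
        # Predict where the object should be now if its velocity has not changed
        pos_pred = pos + vel * dt
        # Correct the prediction using the residual between detection and prediction
        residual = z - pos_pred
        pos = pos_pred + alpha * residual
        vel = vel + (beta / dt) * residual
        estimates.append((pos, vel))
    return estimates


def predict_position(pos, vel, horizon_s):
    """Extrapolate a track into the future under the same constant-velocity assumption."""
    return pos + vel * horizon_s


# A vehicle ahead moving at 10 m/s, detected every 0.1 s (noise-free here for clarity)
dt = 0.1
detections = [10.0 * dt * k for k in range(100)]
pos, vel = alpha_beta_track(detections, dt)[-1]
print(f"estimated speed {vel:.2f} m/s, position in 1 s: {predict_position(pos, vel, 1.0):.1f} m")
```

The track starts with no velocity information and converges on the true 10 m/s within a few seconds of detections. After that it can extrapolate the vehicle's future position.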

Simulation is crucial for testing and validating autonomous vehicle systems. Engineers use simulation tools to replicate different driving scenarios and analyze how sensors behave under various conditions.

Modeling Sensor Output:

This involves creating realistic sensor models (e.g., camera, lidar, radar) that generate outputs similar to those produced by real-world sensors. These outputs include detection ranges, object locations, and velocities.
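A sensor model of this kind can be reduced to a few effects applied to ground truth: limited range, occasional missed detections, and measurement noise. The Python sketch below models exactly those three. The parameter values are assumptions, not the specification of any real sensor. It is not how MATLAB's sensor models are implemented; it only illustrates the idea.

```python
import random
from dataclasses import dataclass


@dataclass
class Detection:
    range_m: float         # measured distance to the object
    range_rate_mps: float  # measured rate of change of that distance


def simulate_detections(truth, rng, max_range_m=150.0, p_detect=0.9,
                        range_sigma_m=0.5, rate_sigma_mps=0.2):
    """Turn ground-truth (range, range_rate) pairs into imperfect sensor detections.

    Models limited range, occasional missed detections, and Gaussian measurement
    noise. All default values are illustrative assumptions.
    """
    detections = []
    for true_range, true_rate in truth:
        if true_range > max_range_m:   # beyond the sensor's reach: never reported
            continue
        if rng.random() >= p_detect:   # missed detection
            continue
        detections.append(Detection(
            range_m=true_range + rng.gauss(0.0, range_sigma_m),
            range_rate_mps=true_rate + rng.gauss(0.0, rate_sigma_mps),
        ))
    return detections


# Ground truth for three objects: (range m, range rate m/s); the last is out of range
truth = [(20.0, -3.0), (95.0, 1.5), (180.0, 0.0)]
for d in simulate_detections(truth, random.Random(42)):
    print(d)
```

Passing a seeded `random.Random` makes each simulated run repeatable. This matters when the same scenario is replayed to compare algorithm versions.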

Driving Scenarios:

Using MATLAB and Simulink, you can simulate a wide range of driving scenarios, such as city driving, highway driving, and more challenging environments like inclement weather or low-light conditions.

Tools for Simulation:

MATLAB/Simulink provides tools like Automated Driving Toolbox and Driving Scenario Designer that allow you to model and simulate sensor data (camera, radar, lidar) and assess vehicle performance.

The Ground Truth Labeler in MATLAB/Simulink is used to manually label or annotate sensor data (e.g., images, lidar, radar outputs) to provide the “ground truth” for training and validating machine learning models.

Load Ground Truth Signals:

You can import sensor data from various sources into MATLAB/Simulink and use the Ground Truth Labeler to label signals (such as objects detected by cameras or lidar). These labels provide accurate reference information for training and evaluating algorithms.

Labeling Process:

The Ground Truth Labeler allows you to annotate objects, track their movement, and provide additional information like the type of object (vehicle, pedestrian, etc.), its speed, or its trajectory. Multiple signals can be labeled for use in multi-sensor fusion or multi-object tracking.
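A common way labeling tools reduce manual effort when tracking an object's movement is to label only keyframes and interpolate the box in between. The Python sketch below is a hypothetical illustration of that idea. The `BoxLabel` schema and function names are made up for the example. They are not the Ground Truth Labeler's own implementation or data format.

```python
from dataclasses import dataclass


@dataclass
class BoxLabel:
    """One ground-truth annotation in a hypothetical schema: a 2D box in pixels."""
    frame: int
    label: str   # object class, e.g. "vehicle" or "pedestrian"
    x: float     # top-left corner
    y: float
    w: float     # width and height
    h: float


def _lerp(a: float, b: float, t: float) -> float:
    return a + t * (b - a)


def interpolate_keyframes(start: BoxLabel, end: BoxLabel) -> list[BoxLabel]:
    """Fill every frame between two manually labeled keyframes by linear interpolation."""
    if start.label != end.label or end.frame <= start.frame:
        raise ValueError("keyframes must share a label and be in increasing frame order")
    span = end.frame - start.frame
    track = []
    for f in range(start.frame, end.frame + 1):
        t = (f - start.frame) / span
        track.append(BoxLabel(f, start.label,
                              _lerp(start.x, end.x, t), _lerp(start.y, end.y, t),
                              _lerp(start.w, end.w, t), _lerp(start.h, end.h, t)))
    return track


# The annotator labels frames 0 and 4; frames 1-3 are filled in automatically
track = interpolate_keyframes(BoxLabel(0, "vehicle", 100, 50, 40, 30),
                              BoxLabel(4, "vehicle", 140, 58, 48, 36))
for box in track:
    print(box)
```

Linear interpolation only holds when the object moves smoothly between keyframes. Interpolated frames are therefore usually reviewed and corrected by hand.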

Export and Explore Ground Truth Labels:

Once labeling is complete, the labeled data can be exported for use in simulations or machine learning models. The labels can be explored using MATLAB tools for further analysis or integration into a larger workflow.
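A typical way to explore exported ground truth is to compare a detector's output against it. The Python sketch below uses intersection over union (IoU), a standard overlap score for bounding boxes. The JSON layout is a hypothetical stand-in for an exported label file, not the format the Ground Truth Labeler produces. The 0.5 match threshold is a common convention rather than a fixed rule.

```python
import json


def iou(a, b):
    """Intersection over union of two boxes given as [x, y, w, h] (top-left corner, size)."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    inter_w = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    inter_h = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


# Round-trip one label through JSON, as if it had been exported to a file
exported = json.dumps({"frame": 7, "label": "vehicle", "box": [100, 50, 40, 30]})
ground_truth = json.loads(exported)

detected_box = [110, 55, 40, 30]  # hypothetical detector output for the same frame
score = iou(ground_truth["box"], detected_box)
print(f"IoU = {score:.3f} ->", "match" if score >= 0.5 else "miss")
```

Here the detection overlaps the labeled box with an IoU of about 0.45. At a 0.5 threshold it would count as a miss, even though it is clearly near the right object.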

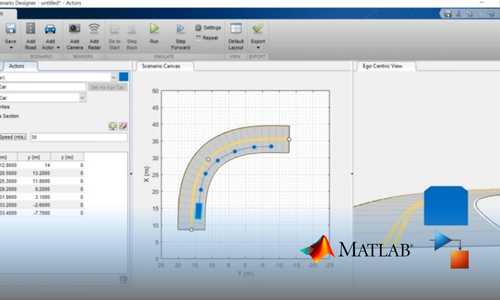

Creating realistic driving scenarios is essential for developing and testing autonomous systems.

Scenario Generation from Recorded Data:

You can use MATLAB/Simulink to generate driving scenarios based on real-world vehicle data. This could include data from cameras, lidar, radar, or GPS.
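Before recorded GPS positions can serve as actor waypoints, they are usually converted into local metric coordinates. The Python sketch below uses an equirectangular approximation around the first fix, which is reasonable over a few kilometres but not for long routes. The log values are hypothetical. MATLAB's own scenario-generation tools may handle this step differently.

```python
import math

EARTH_RADIUS_M = 6_378_137.0  # WGS-84 equatorial radius


def gps_to_local_xy(lat0, lon0, lat, lon):
    """Approximate east/north offsets (m) of (lat, lon) from a reference fix (lat0, lon0).

    Equirectangular approximation: adequate over short distances only.
    """
    x_east = math.radians(lon - lon0) * EARTH_RADIUS_M * math.cos(math.radians(lat0))
    y_north = math.radians(lat - lat0) * EARTH_RADIUS_M
    return x_east, y_north


# Hypothetical recorded GPS log: (time s, latitude deg, longitude deg)
log = [(0.0, 48.13700, 11.57500), (1.0, 48.13700, 11.57520), (2.0, 48.13710, 11.57540)]
lat0, lon0 = log[0][1], log[0][2]
waypoints = [gps_to_local_xy(lat0, lon0, lat, lon) for _, lat, lon in log]
times = [t for t, _, _ in log]
# Average speed on each segment, useful for setting actor speeds in the scenario
speeds = [math.dist(waypoints[i], waypoints[i + 1]) / (times[i + 1] - times[i])
          for i in range(len(log) - 1)]
print(waypoints)
print(speeds)
```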

Programmatically Create Actors and Vehicle Trajectories:

MATLAB allows you to define actors (e.g., vehicles, pedestrians) and their trajectories programmatically. You can specify vehicle speed, position, and behaviors, such as turning or lane changes, for each actor in a simulation.
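The Python sketch below shows the idea behind waypoint-based trajectories. An actor moves along straight segments between waypoints at a constant speed, and its position can be queried at any time. The `Actor` class is hypothetical and is not the Automated Driving Toolbox API, which has its own functions for defining actors and trajectories.

```python
import math
from dataclasses import dataclass


@dataclass
class Actor:
    """Hypothetical scenario actor that follows straight segments between
    waypoints at a constant speed (not the Automated Driving Toolbox API)."""
    name: str
    waypoints: list[tuple[float, float]]  # (x, y) in metres
    speed_mps: float

    def position_at(self, t_s: float) -> tuple[float, float]:
        remaining = self.speed_mps * t_s  # distance travelled since t = 0
        for (x1, y1), (x2, y2) in zip(self.waypoints, self.waypoints[1:]):
            seg = math.hypot(x2 - x1, y2 - y1)
            if remaining <= seg:
                f = remaining / seg if seg > 0 else 0.0  # fraction of this segment covered
                return (x1 + f * (x2 - x1), y1 + f * (y2 - y1))
            remaining -= seg
        return self.waypoints[-1]  # past the final waypoint: the actor stops there


# Ego drives straight; a slower car ahead changes into the lane 3.5 m to its left
ego = Actor("ego", [(0.0, 0.0), (200.0, 0.0)], speed_mps=20.0)
lead = Actor("car", [(30.0, 0.0), (60.0, 0.0), (90.0, 3.5), (200.0, 3.5)], speed_mps=15.0)
for t in (0.0, 2.0, 4.0):
    print(t, ego.position_at(t), lead.position_at(t))
```

A lane change here is simply a waypoint offset sideways by one lane width. Real scenario tools typically smooth such paths so that heading and lateral acceleration stay realistic.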

Driving Scenario Designer:

MATLAB’s Driving Scenario Designer provides a GUI that helps create and visualize complex driving environments, including road networks, traffic flow, and dynamic actors. This tool supports importing map data (such as OpenStreetMap) and defining realistic driving conditions.

Testing Open-Loop and Closed-Loop ADAS Algorithms:

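Open-loop ADAS testing feeds recorded or simulated sensor data to an algorithm, but the algorithm’s outputs are not fed back into the simulation, so they do not change the vehicle’s motion (e.g., evaluating a lane-detection algorithm on recorded camera frames).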

Closed-loop ADAS testing applies the algorithm’s outputs (such as steering, braking, or acceleration commands) to the simulated vehicle, which in turn changes the sensor data (from cameras or radar, for example) that the algorithm receives next. Scenarios created in the Driving Scenario Designer can be simulated in MATLAB/Simulink in both open-loop and closed-loop configurations for validating ADAS (Advanced Driver Assistance Systems) algorithms.
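The difference between the two test modes shows up clearly in a toy car-following simulation. In the Python sketch below, a simple ACC-style proportional controller computes an acceleration command at every step. In closed loop the command changes the ego speed, and therefore the next gap the controller sees. In open loop the same command is computed but the ego replays a fixed speed. The controller, gains, and limits are hypothetical and chosen only for illustration.

```python
def simulate_following(closed_loop: bool, steps: int = 200, dt: float = 0.1) -> float:
    """Simulate an ego vehicle approaching a slower lead vehicle; return the final gap (m).

    A simple ACC-style proportional controller (hypothetical gains) computes an
    acceleration command each step. In closed loop the command changes the ego
    speed; in open loop it is computed but never applied.
    """
    lead_pos, lead_speed = 50.0, 20.0  # lead starts 50 m ahead at a constant 20 m/s
    ego_pos, ego_speed = 0.0, 25.0     # ego starts 5 m/s faster
    desired_gap = 30.0
    k_gap, k_speed = 0.2, 0.5          # illustrative controller gains
    for _ in range(steps):
        gap = lead_pos - ego_pos       # what a radar would report this step
        accel = k_gap * (gap - desired_gap) + k_speed * (lead_speed - ego_speed)
        accel = max(-6.0, min(2.0, accel))  # assumed braking/acceleration limits, m/s^2
        if closed_loop:
            ego_speed = max(0.0, ego_speed + accel * dt)  # the command moves the vehicle
        ego_pos += ego_speed * dt
        lead_pos += lead_speed * dt
    return lead_pos - ego_pos


print(f"open loop:   final gap {simulate_following(False):.1f} m")
print(f"closed loop: final gap {simulate_following(True):.1f} m")
```

In the open-loop run the gap becomes negative, meaning the ego would have driven into the lead vehicle, because the controller's commands never reach the vehicle. In the closed-loop run the gap settles near the 30 m target. Open-loop runs suit checking what an algorithm outputs, while closed-loop runs are needed to judge how the vehicle behaves as a result.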

Import OpenStreetMap Data:

You can import real-world map data from OpenStreetMap into the Driving Scenario Designer to create more realistic scenarios that include road networks, intersections, and other infrastructure.
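The Driving Scenario Designer performs the OpenStreetMap import itself. The Python sketch below only shows what such a file contains and how road geometry can be read from it. OSM XML stores `node` elements with `lat`/`lon` attributes and `way` elements that list node references (`nd ref`) and key/value `tag`s. Roads are ways tagged `highway`. The tiny map below is made up for the example.

```python
import xml.etree.ElementTree as ET


def roads_from_osm(osm_xml: str) -> dict:
    """Return {way_id: [(lat, lon), ...]} for every way tagged as a road (highway=*)."""
    root = ET.fromstring(osm_xml)
    nodes = {n.get("id"): (float(n.get("lat")), float(n.get("lon")))
             for n in root.iter("node")}
    roads = {}
    for way in root.iter("way"):
        tags = {t.get("k"): t.get("v") for t in way.iter("tag")}
        if "highway" not in tags:
            continue  # skip buildings, waterways, etc.
        roads[way.get("id")] = [nodes[nd.get("ref")] for nd in way.iter("nd")
                                if nd.get("ref") in nodes]
    return roads


sample = """<osm version="0.6">
  <node id="1" lat="48.1370" lon="11.5750"/>
  <node id="2" lat="48.1375" lon="11.5760"/>
  <node id="3" lat="48.1380" lon="11.5770"/>
  <way id="100"><nd ref="1"/><nd ref="2"/><tag k="highway" v="residential"/></way>
  <way id="200"><nd ref="2"/><nd ref="3"/><tag k="building" v="yes"/></way>
</osm>"""
print(roads_from_osm(sample))
```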

Looking for in-depth syllabus information? Explore the endless possibilities in Autonomous Vehicle Design with our brochure!

Share this detailed brochure with your friends! Spread the word and help them discover the amazing opportunities awaiting them.

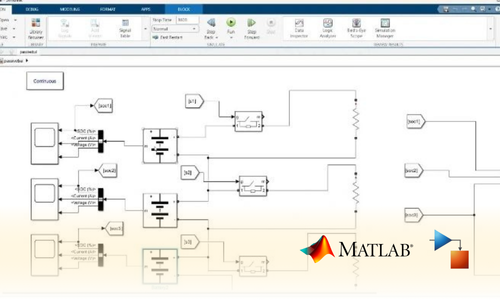

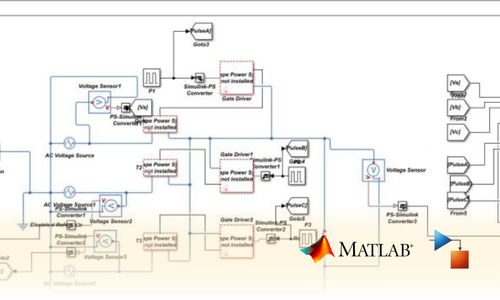

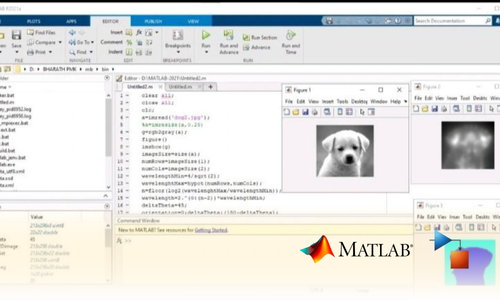

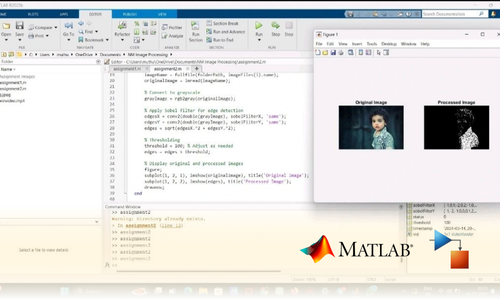

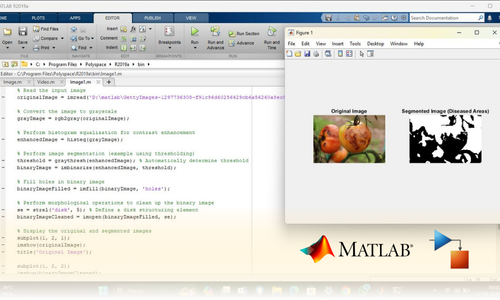

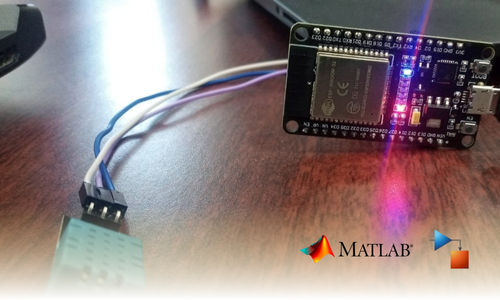

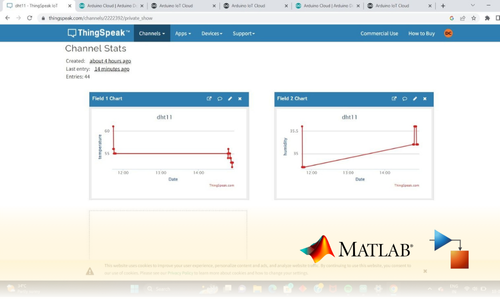

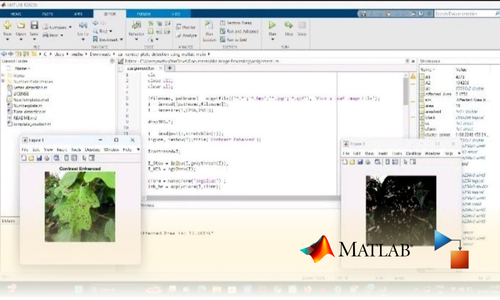

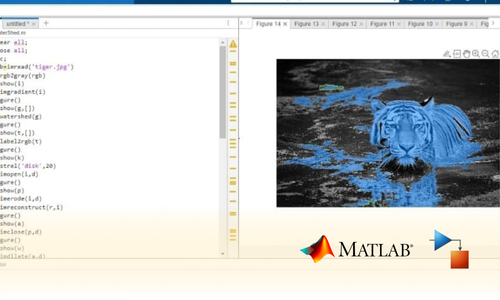

Project Submission: Example Output Screenshots from Our Clients

Take a look at these sample outputs crafted by our clients. These screenshots showcase the impressive results achieved through our courses and projects. Be inspired by their work and visualize what you can create!

Dual Certification: Internship Completion & Participation

Earn a prestigious dual certification upon successful completion of our internship program. This recognition validates both your participation and the skills you have honed during the internship.

How does this Internship Program Work?

Step 1: Enroll in the Program

- Get a Mentor Assigned

- Presentations & Practice Codes

- Learn on Your Own Schedule

- Understand the Concepts

Step 2: Project Development

- Implement the Skills You Learn

- Develop Projects with assistance

- Get Codes for Reference

- Visualise the Concepts

Step 3: Get Certified

- Certificate of Internship

- Project Completion Certificate

- Share on social media

- Get Job Notifications

Choose the Plan That Fits Your Needs

Master the Latest Industrial Skills. Select a technology domain & kick off your Internship immediately.

1 Month

₹1999/-

₹999/-

- Internship Acceptance Letter

- 90 Days from the date of payment

- 4 LIVE interactive Mastermind Sessions

- 4+ Capstone Projects & Codes

- Full Roadmap

- Internship Report

- 1 Month Internship Certificate

2 Months

₹3299/-

₹1899/-

- Internship Acceptance Letter

- 180 Days from the date of payment

- 4 LIVE interactive Mastermind Sessions

- 12+ Capstone Projects & Codes

- Full Roadmap

- Internship Report

- Participation Certificate

- 2 Month Internship Certificate

Our Alumni Employers

Curious where our graduates make their mark? Our students go on to excel in leading tech companies, innovative startups, and prestigious research institutions. Their advanced skills and hands-on experience make them highly sought-after professionals in the industry.

- I recently completed my Python internship under the guidance of Mentor poongodi mam We learnt so many new things that developed my knowledge.this experience is good to learn

- I completed my python internship guidance of mentor poongodi mam. She thought us in friendly qay

- Poongodi mam done very well She took the class very well When we ask any doubt without getting bored she will explain,we learned so much from mam,marvelous

- I have handled by poongodi mam.domain python intership...was good

- I recently completed Python internship under the guidance of poongodi mam who excelled in explaining concepts in an easily understandable way

- Fantastic class we were attended..we got nice experience from this class..thank you for teaching python mam...

- The course content was well-structured. I gained valuable insights into microcontrollers, sensors, and programming languages. The workshop was informative, interactive, and challenging, pushing me to think creatively. Ms Jimna our instructor her guidance and feedback helped me overcome obstacles and improve my skills.

- The learning experience was really worth since more than gaining just the knowledge all of the inputs were given in a friendly and sportive manner which then made it a good place to learn something with a free mindset... 👍🏻

- I recently completed my full stack python intership under the guidance of mentor Gowtham,who excelled in explaining concepts in an easily understand manner

- Gowtham-very interesting class and I learning so many things in full stack python development and I complete my internship in Pantech e learning and it is useful for my career

FAQ

How does sensor fusion work in autonomous vehicles?

Sensor fusion combines data from multiple sensors (e.g., cameras, radar, lidar) to improve accuracy and reliability. It helps the vehicle make more informed decisions by providing a more complete understanding of its surroundings.
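One of the simplest fusion rules is inverse-variance weighting. Independent measurements of the same quantity are averaged, with each weighted by how much it is trusted. The Python sketch below fuses a camera and a radar distance estimate. The readings and variances are hypothetical. The rule assumes independent, unbiased Gaussian errors, and full fusion pipelines (e.g., Kalman-filter trackers) build on the same principle.

```python
def fuse(measurements):
    """Fuse independent estimates of one quantity by inverse-variance weighting.

    measurements: list of (value, variance) pairs.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / var for _, var in measurements]  # more precise -> larger weight
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total


# Hypothetical distance-to-lead-vehicle readings: (metres, variance in m^2)
camera = (41.0, 4.0)   # camera depth estimates are noisy at range
radar = (40.0, 0.25)   # radar range is precise
value, variance = fuse([camera, radar])
print(f"fused distance {value:.2f} m, variance {variance:.3f} m^2")
```

The fused estimate lies close to the radar reading because radar is assumed to be far more precise here. Its variance is lower than either sensor's alone, which is the benefit the answer above describes.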

Can I import real-world map data for scenario creation?

Yes, MATLAB allows you to import real-world map data from sources like OpenStreetMap into the Driving Scenario Designer. This helps create realistic driving environments for testing AV systems.

How do I test ADAS algorithms in MATLAB?

ADAS algorithms can be tested in open-loop configurations (the algorithm’s outputs do not affect the simulated vehicle) and closed-loop configurations (the algorithm’s outputs control the vehicle, which changes the sensor data it receives next). Scenarios created in the Driving Scenario Designer can be simulated in MATLAB/Simulink to evaluate the performance of ADAS algorithms.

What is a driving scenario, and how is it created?

A driving scenario defines the environment and conditions in which an AV operates, including road layouts, traffic, and obstacles. In MATLAB/Simulink, you can create driving scenarios programmatically or using the Driving Scenario Designer tool, which allows you to simulate various driving conditions.

How do I label ground truth data for sensor signals in MATLAB/Simulink?

You can import sensor data into MATLAB and use the Ground Truth Labeler app to annotate objects in the data. Once labeled, this data can be exported for use in simulation or training AV algorithms.

What is the Ground Truth Labeler in MATLAB/Simulink?

The Ground Truth Labeler is a MATLAB/Simulink tool that allows you to manually annotate sensor data (like images or lidar points) to create labeled datasets. This is crucial for training and validating machine learning models used in AV systems.

Start Your Tech Journey Today

Sign Up for Exclusive Resources and Courses Tailored to Your Goals!

© 2025 pantechelearning.com